Here is the ultimate guide to SERP Scraper APIs. Read on.

Search engine results pages (SERPs) are a gold mine of data that digital marketers use to optimize their websites. Whether you’re monitoring competitors, tracking keyword positions, or feeding machine learning models, scraping SERP data has become essential for businesses, SEO specialists, data scientists, and developers.

But web scraping isn’t a walk in the park, especially when it comes to SERP scraping, where IP bans, CAPTCHAs, location targeting, and data accuracy pose relentless challenges.

Enter the SERP Scraper API — a specialized solution designed to bypass these headaches and deliver clean, structured, real-time search engine data at scale.

In this comprehensive guide, we’ll explore what SERP Scraper APIs are, how they work, and why they are indispensable. We’ll also break down the top three industry leaders — Oxylabs, Webshare, and Decodo (formerly Smartproxy) — and what makes their offerings stand out in this evolving ecosystem.

What Is a SERP Scraper API?

A SERP Scraper API is a web-based service that allows users to automatically extract search engine results in real-time by making API calls.

These APIs handle the obstacles that trip up traditional scrapers, such as rate limits, CAPTCHAs, and dynamic rendering, offering a reliable, scalable way to collect SERP data across Google, Bing, Yahoo, Yandex, and more.

Core Features Typically Include:

- Real-time & scheduled scraping

- Location-specific results

- Device-type targeting (desktop/mobile)

- Structured JSON/HTML response

- CAPTCHA-solving & proxy rotation

- Support for organic, paid, map, news, image results

Whether you’re tracking hundreds of keywords or collecting millions of data points, a solid SERP Scraper API ensures that you can extract search data without friction.
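To make the request-and-response idea concrete, here is a minimal sketch of what calling such an API tends to look like. The parameter names (`query`, `location`, `device`, `output`) and the response shape are assumptions for illustration only; every provider defines its own fields, so check the relevant documentation before relying on any of them.

```python
import json


def build_serp_request(query, location="United States", device="desktop", page=1):
    """Return a JSON-serializable payload for a hypothetical SERP API.

    The field names here are illustrative, not any vendor's real schema.
    """
    if device not in ("desktop", "mobile"):
        raise ValueError("device must be 'desktop' or 'mobile'")
    return {
        "query": query,
        "location": location,
        "device": device,
        "page": page,
        "output": "json",
    }


# A made-up response body, shaped the way structured SERP output often looks.
SAMPLE_RESPONSE = json.dumps({
    "organic": [
        {"position": 1, "title": "Example A", "url": "https://a.example"},
        {"position": 2, "title": "Example B", "url": "https://b.example"},
    ],
    "ads": [
        {"position": 1, "title": "Sponsored C", "url": "https://c.example"},
    ],
})


def extract_organic(raw_json):
    """Return (position, url) pairs for the organic results in a response."""
    data = json.loads(raw_json)
    return [(item["position"], item["url"]) for item in data.get("organic", [])]


payload = build_serp_request("best running shoes", location="London", device="mobile")
organic = extract_organic(SAMPLE_RESPONSE)
print(payload)
print(organic)  # [(1, 'https://a.example'), (2, 'https://b.example')]
```

In a real integration, the payload would be sent over HTTPS with your API credentials, and the parsing step would follow the provider's documented schema rather than this sample.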

Why SERP Scraping Matters More Than Ever

In a digital world governed by visibility, SERP data is everything. Businesses and analysts rely on SERP insights for:

- SEO strategy: Monitor keyword performance, detect ranking drops, and analyze SERP features.

- Market intelligence: Track competitors’ ads, brand mentions, and product listings.

- Ad verification: Confirm the presence and accuracy of paid ads across different locations.

- Trend detection: Analyze news, featured snippets, and question boxes to tap into emerging search behavior.

- SERP volatility: Detect algorithm changes and measure volatility indices for informed decisions.
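As a rough illustration of the ranking-drop and volatility use cases above, the sketch below compares two keyword snapshots. The `volatility` score here is simply the mean absolute position change, a deliberately simple stand-in and not any published volatility index; the keyword data is invented.

```python
def rank_changes(previous, current):
    """Compare two {keyword: position} snapshots.

    A positive delta means the keyword moved up (toward position 1).
    Delta is None when the keyword is missing from either snapshot.
    """
    changes = {}
    for keyword in sorted(set(previous) | set(current)):
        old = previous.get(keyword)
        new = current.get(keyword)
        delta = old - new if old is not None and new is not None else None
        changes[keyword] = {"old": old, "new": new, "delta": delta}
    return changes


def volatility(changes):
    """Mean absolute position change over keywords present in both snapshots."""
    deltas = [abs(c["delta"]) for c in changes.values() if c["delta"] is not None]
    return sum(deltas) / len(deltas) if deltas else 0.0


yesterday = {"serp api": 3, "proxy rotation": 7, "captcha solver": 12}
today = {"serp api": 1, "proxy rotation": 9, "rank tracker": 5}

changes = rank_changes(yesterday, today)
for keyword, change in changes.items():
    print(keyword, change)
print("volatility:", volatility(changes))  # volatility: 2.0
```

Keywords that appear in only one snapshot are reported with a `None` delta, which makes newly ranked and dropped terms easy to flag separately.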

Challenges of SERP Scraping Without an API

Trying to scrape SERPs yourself with DIY scripts and browser bots is a recipe for frustration:

- IP blocks & bans: Major search engines detect scraping behavior and block suspicious IPs.

- CAPTCHAs: Solving them at scale is inefficient and unreliable.

- Rate limits: Without sophisticated throttling, your tools are quickly shut down.

- Geo-targeting: Scraping localized results (e.g., New York vs. London) requires rotating residential or mobile IPs.

- Parsing complexity: Dynamic JavaScript content is tough to handle without headless browsers.

This is why enterprise-grade SERP Scraper APIs have become the tool of choice for serious data operations.
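To give a sense of the plumbing a DIY scraper needs just to cope with blocks and rate limits, here is a minimal retry sketch using exponential backoff with jitter. `BlockedError` and `flaky_fetch` are hypothetical stand-ins; a real scraper would raise on an HTTP 429, a CAPTCHA page, or a banned IP, and would still need proxy rotation on top of this.

```python
import random
import time


class BlockedError(Exception):
    """Raised by the caller's fetch function on a block, CAPTCHA, or HTTP 429."""


def fetch_with_backoff(fetch, max_attempts=5, base_delay=1.0, sleep=time.sleep):
    """Call fetch() until it succeeds, waiting longer after each failure."""
    for attempt in range(max_attempts):
        try:
            return fetch()
        except BlockedError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the failure to the caller
            # Delay doubles each attempt; jitter avoids a predictable rhythm.
            delay = base_delay * (2 ** attempt) * random.uniform(0.5, 1.5)
            sleep(delay)


# Simulated fetch that is "blocked" twice before succeeding.
attempts = {"count": 0}


def flaky_fetch():
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise BlockedError("simulated block")
    return "<html>results</html>"


delays = []  # record the waits instead of actually sleeping
result = fetch_with_backoff(flaky_fetch, sleep=delays.append)
print(result, attempts["count"], len(delays))
```

Passing `sleep` as a parameter keeps the demo instant; in production you would leave the default `time.sleep` in place.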

Top SERP Scraper API Providers – EDITOR’S CHOICE

Below, we examine three industry leaders that dominate the SERP API landscape with robust infrastructure, reliability, and scale: Decodo, Oxylabs, and Webshare.

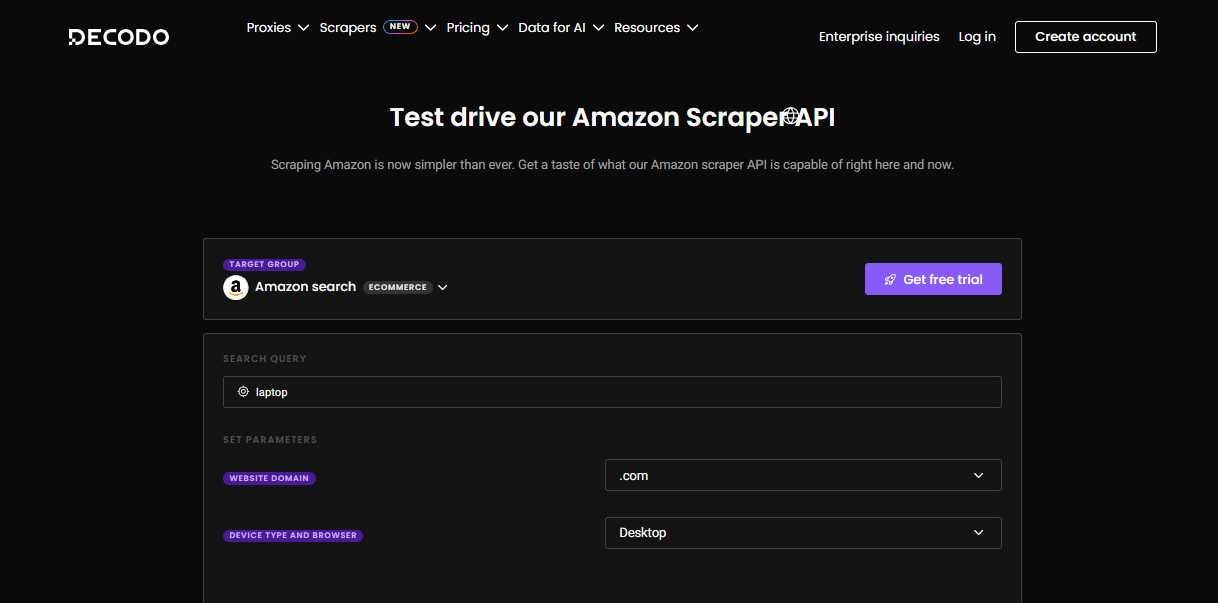

🥇Decodo (formerly Smartproxy) – The Rebrand with Muscle

Decodo (formerly Smartproxy) is loved by 130K+ users around the globe for its ease of use, responsive support, and high-quality solutions. With its fresh new identity, Decodo continues to offer one of the most dev-friendly and powerful SERP scraping APIs on the market.

Key Features:

- Free AI Parser

- Advanced geo-targeting

- Built-in proxy management

- Flexible output formats

- Ability to collect data from Google Search, Google Images, Shopping, and News tabs

Why Decodo Stands Out:

Decodo is a versatile choice that suits both beginners and heavy-duty projects. Whether you’re scraping 100 or 1,000,000 SERPs, their Web Scraping API is built to scale with your workload.

Additional Benefits:

- JavaScript rendering

- 100% success rate

- Real-time and on-demand scraping tasks

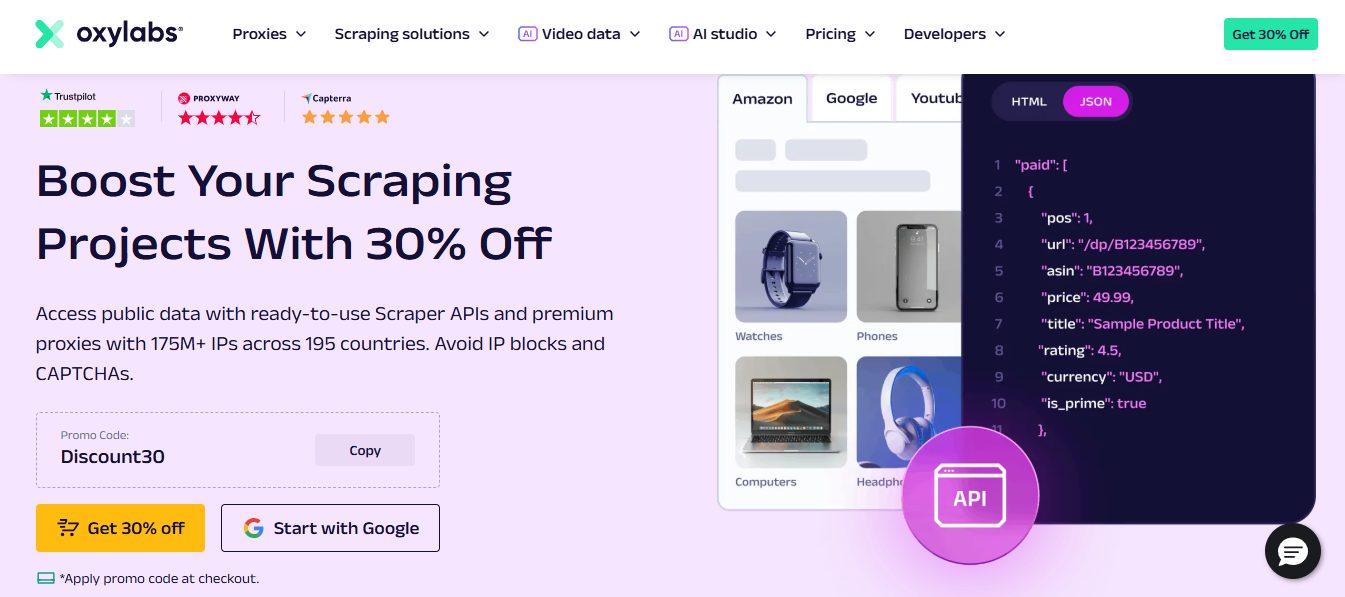

🥈Oxylabs SERP Scraper API – The Enterprise Titan

Oxylabs is widely recognized for its premium-grade infrastructure and enterprise-class data delivery. Their SERP Scraper API stands out due to its coverage, speed, and accuracy.

Key Features:

- ✅ Real-time scraping with a 100% success rate

- 🌐 Coverage for Google, Bing, Yandex, Baidu, and other regional engines

- 🎯 Geo-targeted SERPs — scrape by country, city, or even ZIP code

- 🔐 Captcha solver and proxy management built-in

- ⚙️ JSON & HTML support

- 📊 Batch keyword support

Why Choose Oxylabs?

Oxylabs is ideal for large-scale, mission-critical SERP monitoring. Their infrastructure is unmatched for volume, uptime, and global reach. It’s the go-to choice for enterprise SEO platforms, e-commerce brands, and financial analytics firms.

Developer Friendliness:

- Excellent documentation

- 24/7 customer support

- SDKs available for Python, Node.js, etc.

🥉 Webshare SERP API – The Smart Cost-Effective Contender

Webshare strikes a powerful balance between affordability and performance. Known for its generous free plans and robust proxy network, it offers a clean and reliable SERP scraping experience tailored to startups, agencies, and mid-size businesses.

Key Features:

- ✅ Fast SERP scraping with automatic retries

- 🌎 Worldwide geo-targeting

- 🔁 Proxy rotation and user-agent management

- 🛡️ CAPTCHA bypass

- 🧩 Supports organic, news, map packs, and ads data

What Makes Webshare Different?

- Affordable pricing tiers – Webshare’s transparent pricing makes it accessible to startups

- No learning curve – API is easy to implement with quick start guides

- Free credits to test – Generous free tier for trial and evaluation

Best Use Cases:

- Keyword tracking for SEO agencies

- Local SEO audits and competitive research

- PPC ad monitoring for clients

Comparison Table: Oxylabs vs. Webshare vs. Decodo

| Feature | Oxylabs | Webshare | Decodo (Smartproxy) |
|---|---|---|---|
| Geo-targeting | ✅ City/ZIP | ✅ Country-level | ✅ City-level |
| CAPTCHA bypass | ✅ Built-in | ✅ Built-in | ✅ AI-assisted |
| Free trial | ✅ Yes | ✅ Yes | ✅ Yes |
| Speed & reliability | 🚀 Enterprise-grade | ⚡ Fast & stable | ⚡ Fast with AI parsing |
| Price range | 💰 Mid-tier | 💸 Affordable | 💸 Affordable |
| Proxy integration | ✅ Yes | ✅ Yes | ✅ Yes |
| Dev tools & support | 🛠️ SDK + 24/7 chat | 📚 Docs + email | 🛠️ Docs + dashboards + APIs |

Other Top SERP Scraper API Providers

1. SERPMaster

SERPMaster is a specialized tool built purely for scraping search engines. It offers a Google-only SERP API optimized for high-scale operations with minimal latency. Unlike more generalized scraping tools, SERPMaster’s core focus is delivering real-time organic search results, paid ads, and SERP features like featured snippets, “People Also Ask” boxes, and more.

It supports parameters for country, device type (desktop/mobile), language, and location. One of its major selling points is its simplicity — no overcomplication, just straightforward SERP data. It’s ideal for users who need to perform deep keyword tracking, run SEO software, or generate large volumes of search analytics.

With a robust infrastructure and automatic CAPTCHA-solving, SERPMaster helps digital marketers bypass traditional scraping headaches. Their flexible pricing model and solid documentation make it a great alternative for users who want to focus purely on Google SERP data without dealing with a more complex API stack.

2. SERPAPI

SERPAPI is one of the most well-known SERP scraping tools on the market. It supports a wide range of search engines, including Google, Bing, Yahoo, DuckDuckGo, Baidu, and even platforms like YouTube, Walmart, and eBay. It’s an excellent option for users who want a single API to handle all types of search result extraction.

SERPAPI goes beyond just delivering HTML or raw search data — it structures the response into clean, categorized JSON. For example, you can retrieve separate blocks for ads, knowledge graphs, FAQs, images, news, maps, and more. This structured approach is useful for developers and businesses integrating SERP insights into dashboards or analytics tools.

Its high concurrency, real-time speed, and generous free plan make it popular among startups and indie developers. SERPAPI also has official client libraries for Python, Node.js, and Ruby, reducing integration time. The platform’s thorough documentation and active community support give it extra points.

3. Apify

Apify is a broader web scraping platform that also offers a dedicated Google SERP Scraper Actor. While Apify isn’t purely a SERP API vendor, its flexibility makes it a great choice for technical teams who want custom workflows. You can configure Apify’s scraping actors to extract organic results, ads, people-also-ask sections, or anything on the SERP with precise targeting.

What sets Apify apart is its workflow automation, integration with headless browsers like Puppeteer and Playwright, and cloud-based processing. You can scrape SERP data and immediately feed it into crawlers, Google Sheets, or your own API endpoints.

Apify also provides serverless deployment and auto-scaling, making it a strong fit for users who want more than just keyword rank data. You can build your own “SERP workflows” and chain them with other APIs and integrations. It’s powerful, but may have a steeper learning curve for non-technical users.

4. Bright Data (formerly Luminati)

Bright Data is a premium proxy and data collection platform offering enterprise-grade solutions. Its SERP API is deeply integrated with its global residential, mobile, and datacenter proxy pool, which gives it unmatched flexibility for scraping across countries, regions, and devices.

Bright Data offers both synchronous and asynchronous API models, and it provides complete DOM rendering and browser emulation, which is ideal for dynamic SERPs and localized results. You can access organic listings, top stories, shopping results, and local map packs with pinpoint accuracy.
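The asynchronous model mentioned above usually follows a submit-then-poll pattern: you queue a job, then check back until the results are ready. The sketch below illustrates that flow with `FakeAsyncSerpClient`, an in-memory stand-in invented for this example; it is not Bright Data's SDK or API, and the method names are assumptions.

```python
import itertools


class FakeAsyncSerpClient:
    """In-memory stand-in for an asynchronous SERP API (not a real SDK)."""

    def __init__(self, polls_until_ready=2):
        self._ids = itertools.count(1)
        self._jobs = {}
        self._polls_until_ready = polls_until_ready

    def submit(self, query):
        """Queue a job and return its ID immediately."""
        job_id = next(self._ids)
        self._jobs[job_id] = {"query": query, "polls": 0}
        return job_id

    def status(self, job_id):
        """Report 'pending' for the first few polls, then 'done' with results."""
        job = self._jobs[job_id]
        job["polls"] += 1
        if job["polls"] > self._polls_until_ready:
            return {"state": "done", "results": [f"result for {job['query']}"]}
        return {"state": "pending"}


def wait_for_results(client, job_id, max_polls=10, interval=1.0, sleep=lambda s: None):
    """Poll until the job is done, or give up after max_polls checks."""
    for _ in range(max_polls):
        response = client.status(job_id)
        if response["state"] == "done":
            return response["results"]
        sleep(interval)
    raise TimeoutError(f"job {job_id} not finished after {max_polls} polls")


client = FakeAsyncSerpClient()
job_id = client.submit("serp api")
results = wait_for_results(client, job_id)
print(results)  # ['result for serp api']
```

A synchronous call, by contrast, blocks until the results come back in the same response, which is simpler for small jobs but ties up a connection for each query.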

Although Bright Data is often pricier than competitors, it’s an ideal solution for large-scale data extraction projects requiring compliance, stability, and volume. Their legal framework is strict — ensuring ethical scraping — and their compliance-first approach gives enterprise customers peace of mind.

Their platform is rich in features, but you’ll need some technical skills or onboarding support to get the most out of it.

5. DataForSEO

DataForSEO is a data infrastructure provider offering rich APIs for keyword research, SERP data, rank tracking, and backlink profiles. Their SERP API is part of a larger suite that integrates with SEO, SEM, and PPC analysis tools.

It supports scraping across search engines like Google, Bing, Yahoo, and Yandex, and provides granular control over request parameters, including country, city, language, and device. You can extract data for organic listings, paid results, featured snippets, and SERP features such as “People Also Ask” or local packs.

One advantage of DataForSEO is its pay-as-you-go model — great for agencies and developers who don’t want long-term contracts. They also provide bulk SERP crawling, batch keyword support, and postback functionality for integration with CRMs and custom dashboards.

If you want precise, developer-friendly data feeds to power SEO tools or market research dashboards, DataForSEO is a top-tier contender.
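Batch keyword support generally means sending keywords in groups rather than one request per term. As a provider-agnostic sketch (the batch size and normalization rules here are assumptions, not DataForSEO's limits), keyword lists can be cleaned and chunked like this:

```python
def batch_keywords(keywords, batch_size):
    """Normalize, de-duplicate, and split keywords into fixed-size batches."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    # Lowercase and trim each keyword, drop blanks, keep first-seen order.
    cleaned = (k.strip().lower() for k in keywords if k.strip())
    unique = list(dict.fromkeys(cleaned))
    return [unique[i:i + batch_size] for i in range(0, len(unique), batch_size)]


batches = batch_keywords(["SERP API", "rank tracker", "serp api ", "", "proxy"], 2)
print(batches)  # [['serp api', 'rank tracker'], ['proxy']]
```

De-duplicating before batching matters on pay-as-you-go plans, since repeated keywords would otherwise be billed more than once.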

6. ScraperAPI

ScraperAPI is a generalized web scraping platform that has added support for scraping search engines through customizable request headers and built-in proxy rotation. While not as laser-focused on SERP APIs as others, ScraperAPI makes up for it with scalability and ease of use.

Their infrastructure automatically handles CAPTCHAs, IP blocks, and location targeting. You can specify user agents, headers, and parsing options — great for scraping SERPs from desktop, mobile, or specific browsers.

It integrates well with Google SERPs and can be paired with parsing tools or custom scripts to extract clean JSON. Their pricing is competitive, and they offer robust usage tracking. While it doesn’t provide the rich SERP feature classification that others like SERPAPI do, it’s a solid foundation for those who want to build their own scraper logic using raw data responses.

ScraperAPI is perfect for developers who want to scale fast without getting tangled in proxy and CAPTCHA management.

FAQs: SERP Scraper API

Is SERP scraping legal?

SERP scraping is a legal gray area. While scraping publicly available data isn’t illegal in most countries, it can violate a website’s terms of service. Using compliant providers and avoiding personal data collection is essential.

Can I use a free SERP Scraper API?

Yes, some platforms like SERPAPI and Webshare offer free credits or trial plans. However, free plans usually have strict limits on volume, speed, and features. For commercial or high-volume use, paid plans are more reliable.

What search engines are typically supported?

Most providers focus on Google, but others offer support for Bing, Yahoo, Yandex, Baidu, DuckDuckGo, and even vertical engines like YouTube, Amazon, and eBay. Always check the API’s documentation for exact coverage.

How is a SERP API different from a proxy?

A proxy only gives you IP access to make your own requests, while a SERP API is a full-service solution that handles proxy rotation, CAPTCHA solving, geo-targeting, and parsing. For SERP collection, an API is usually simpler to integrate and more reliable than managing proxies yourself.

Can SERP APIs track mobile vs. desktop results?

Yes, most modern SERP APIs allow you to choose the device type for the query. This helps simulate real-world scenarios since Google’s mobile and desktop rankings can differ significantly.

Use Cases by Industry

🔍 SEO Agencies

Track thousands of keywords across regions with daily updates. Automate client SERP reports and rankings with ease.
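Tracking across regions multiplies request volume quickly, because every keyword is checked once per location and device. The sketch below, with made-up keywords and a hypothetical task format, expands that grid so you can estimate daily usage before picking a plan:

```python
from itertools import product


def build_tasks(keywords, locations, devices=("desktop", "mobile")):
    """Return one task per keyword/location/device combination."""
    return [
        {"keyword": keyword, "location": location, "device": device}
        for keyword, location, device in product(keywords, locations, devices)
    ]


tasks = build_tasks(
    ["serp api", "rank tracker", "proxy"],
    ["New York", "London"],
)
print(len(tasks))  # 12 (3 keywords x 2 locations x 2 devices)
print(tasks[0])
```

By the same arithmetic, 1,000 keywords across 5 locations and 2 devices comes to 10,000 requests per daily run.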

🛒 E-commerce

Monitor how products appear in Shopping results. Benchmark against competitors on a weekly basis.

📰 News Monitoring

Use SERP APIs to monitor trending topics, featured snippets, and news carousel placements in real time.

📊 Data Analytics & Research

Feed structured SERP data into dashboards, ML models, or research reports. Perfect for trend spotting and predictive analysis.
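Most dashboards and ML pipelines expect flat rows rather than nested JSON. Here is a minimal sketch of flattening organic results into CSV using Python's standard library; the record structure (`keyword`, `organic`, `position`, `url`) is an assumed shape, not any provider's actual schema.

```python
import csv
import io


def serp_to_csv(records):
    """Flatten nested SERP records into CSV text, one row per organic result."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer,
        fieldnames=["keyword", "position", "url"],
        lineterminator="\n",
    )
    writer.writeheader()
    for record in records:
        for item in record["organic"]:
            writer.writerow({
                "keyword": record["keyword"],
                "position": item["position"],
                "url": item["url"],
            })
    return buffer.getvalue()


records = [
    {
        "keyword": "serp api",
        "organic": [
            {"position": 1, "url": "https://a.example"},
            {"position": 2, "url": "https://b.example"},
        ],
    },
]

csv_text = serp_to_csv(records)
print(csv_text)
```

The resulting text can be written to a file or loaded directly into a spreadsheet, BI tool, or data frame.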

Final Thoughts: Picking the Right SERP API

When choosing a SERP Scraper API, the key is to match the scale of your project, budget, and desired features.

If you’re an enterprise or platform provider, go with Oxylabs for its battle-tested infrastructure and ultra-reliable delivery.

If you need affordability and simplicity, Webshare delivers strong value without overcomplicating things.

And if you want a versatile, smart engine with full parsing capability, Decodo (Smartproxy) is a worthy addition to your stack.

All three providers have earned their place in the SERP API elite — now it’s just a matter of choosing the right ally for your data mission.

Ready to Scrape Smarter?

Choose your SERP scraper wisely, automate your data flow, and dominate your niche — with the power of Oxylabs, Webshare, and Decodo behind you.

👉 Visit SecureBlitz for more tutorials, reviews, and exclusive affiliate offers from top proxy and scraping brands.

About the Author:

Meet Angela Daniel, an esteemed cybersecurity expert and the Associate Editor at SecureBlitz. With a profound understanding of the digital security landscape, Angela is dedicated to sharing her wealth of knowledge with readers. Her insightful articles delve into the intricacies of cybersecurity, offering a beacon of understanding in the ever-evolving realm of online safety.

Angela's expertise is grounded in a passion for staying at the forefront of emerging threats and protective measures. Her commitment to empowering individuals and organizations with the tools and insights to safeguard their digital presence is unwavering.