In this post, I will answer the question: what is a proxy scraper? I will also show you the best proxy scraping tools.

Proxy servers have become an essential tool for many internet users and businesses. They offer benefits like increased privacy, bypassing geo-restrictions, load balancing, and more. However, finding reliable proxy servers can be challenging.

This is where proxy scrapers come in. In this comprehensive guide, we’ll explore what proxy scrapers are, how they work, and their benefits and limitations, as well as review some of the best proxy scraping tools available.

What is a Proxy Scraper?

A proxy scraper is a tool or software designed to automatically collect and verify proxy server addresses from various sources on the internet.

These tools scan websites, forums, and other online resources that list publicly available proxy servers. They then compile this information into a usable list of proxy IPs and ports.

Proxy scrapers serve a crucial role in the proxy ecosystem by:

- Discovering new proxy servers

- Verifying the functionality of existing proxies

- Categorizing proxies based on type (HTTP, HTTPS, SOCKS4, SOCKS5)

- Checking proxy anonymity levels

- Determining the geographical location of proxies

- Measuring proxy speed and latency

By automating the process of finding and testing proxies, these tools save users significant time and effort compared to manually searching for and verifying proxy servers.

Best Featured Proxy Service Providers

When it comes to premium proxy solutions, some providers stand out for their unique strengths, innovative features, and reliability. Below, we highlight two top-tier proxy services, Oxylabs and Decodo (formerly Smartproxy), each excelling in different areas to meet diverse web scraping and data collection needs.

1. Oxylabs – Best for Enterprise-Grade Data Extraction

Oxylabs is a powerhouse in the proxy industry, offering enterprise-level solutions with extensive proxy pools and AI-driven data collection tools. With millions of residential and datacenter proxies, Oxylabs provides unmatched scalability and reliability for businesses requiring large-scale web scraping and market research.

Key Features:

✅ 175M+ ethically sourced residential proxies

✅ AI-powered proxy management for optimal performance

✅ 99.9% uptime with industry-leading security

✅ Dedicated account management for enterprise clients

Best For: Enterprises, data analysts, and businesses handling high-volume scraping projects.

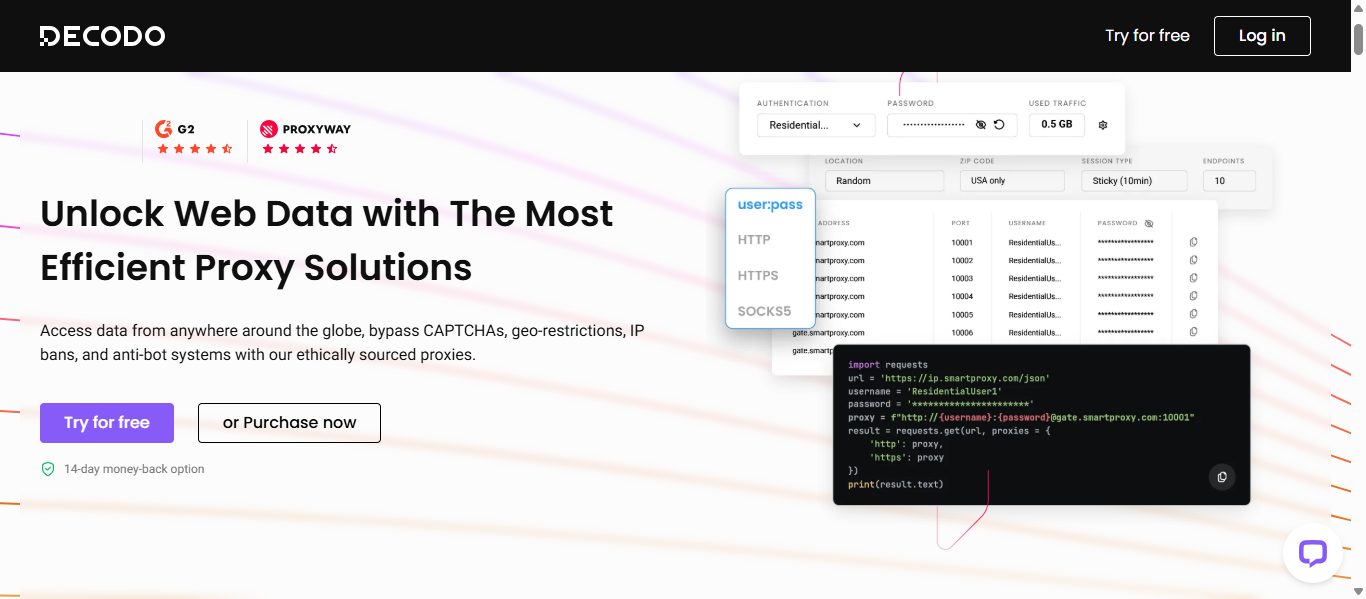

2. Decodo – Best for User-Friendly and Affordable Proxies

Decodo (formerly Smartproxy) delivers a seamless experience with easy-to-use proxy management tools and an impressive 115M+ IP global proxy pool, making it an excellent choice for those looking for affordability without compromising quality.

With residential proxies spanning 195+ locations, a user-friendly dashboard, and fast customer support, Decodo is a go-to solution for marketers, SEO specialists, and businesses of all sizes.

Key Features:

✅ 65M+ proxies, including 55M+ residential IPs 🌎

✅ <0.3s avg speed ⚡ and 99.99% uptime 🔄

✅ Automatic rotation to prevent IP bans 🔄

✅ Supports HTTPS & SOCKS5 for maximum compatibility 🔗

✅ Fast customer support – 40s average response time ⏳

✅ Easy setup & free trials on all products 🎉

Best For: Marketers, freelancers, businesses, and web scraping professionals needing reliable and cost-effective proxy solutions.

How Do Proxy Scrapers Work?

Proxy scrapers typically follow a multi-step process to collect and verify proxy information:

a) Source Identification: The scraper starts by identifying potential sources of proxy lists. These may include:

- Public proxy websites

- Forum posts

- GitHub repositories

- Social media platforms

- Other online databases

b) Data Extraction: The tool extracts relevant information from these sources using web scraping techniques. This usually includes the proxy IP address, port number, and sometimes additional details like proxy type or location.

c) Parsing and Formatting: The extracted data is then parsed and formatted into a standardized structure for easier processing and storage.

d) Deduplication: The scraper removes duplicate entries to ensure a clean, unique list of proxies.

e) Verification: This crucial step involves testing each proxy to confirm its functionality. The scraper may:

- Attempt to connect to the proxy

- Send test requests through the proxy to check its ability to route traffic

- Measure response times and latency

- Determine the proxy’s anonymity level

- Identify the proxy’s geographical location

f) Categorization: The scraper categorizes the proxies based on the verification results. This may include sorting by:

- Protocol (HTTP, HTTPS, SOCKS4, SOCKS5)

- Anonymity level (transparent, anonymous, elite)

- Speed (fast, medium, slow)

- Geographical location

g) Storage and Export: Finally, the verified and categorized proxy list is stored in a database or exported to a file format like CSV, JSON, or TXT for user access.
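The extraction, parsing, deduplication, and export steps above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular tool works: the function names (`extract_proxies`, `to_json`, `to_csv`) and the sample text are assumptions made up for the example, and the IP addresses come from the reserved documentation ranges.

```python
import csv
import io
import json
import re

# Matches "IP:port" pairs such as 203.0.113.7:8080 inside arbitrary text.
PROXY_RE = re.compile(r"\b(\d{1,3}(?:\.\d{1,3}){3}):(\d{1,5})\b")


def extract_proxies(text):
    """Return unique, well-formed (ip, port) pairs in first-seen order."""
    seen = set()
    result = []
    for ip, port in PROXY_RE.findall(text):
        octets_ok = all(0 <= int(part) <= 255 for part in ip.split("."))
        port_ok = 1 <= int(port) <= 65535
        if not (octets_ok and port_ok):
            continue  # discard malformed entries
        key = (ip, int(port))
        if key not in seen:  # deduplication step
            seen.add(key)
            result.append(key)
    return result


def to_json(proxies):
    """Serialize the list as a JSON array of {"ip", "port"} objects."""
    return json.dumps([{"ip": ip, "port": port} for ip, port in proxies])


def to_csv(proxies):
    """Serialize the list as CSV text with a header row."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(["ip", "port"])
    writer.writerows(proxies)
    return buf.getvalue()


# Hypothetical scraped page content containing a duplicate and two bad entries.
sample = """
Fresh list: 203.0.113.7:8080, 198.51.100.23:3128
duplicate 203.0.113.7:8080 and invalid 999.1.1.1:80 plus 192.0.2.5:70000
"""
proxies = extract_proxies(sample)
print(proxies)  # [('203.0.113.7', 8080), ('198.51.100.23', 3128)]
print(to_csv(proxies))
```

A regular expression is only one way to pull addresses out of a page. Sources that publish structured tables or JSON are usually better handled with a proper HTML or JSON parser.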

Many proxy scrapers run this process continuously or at regular intervals to maintain an up-to-date list of working proxies.
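As a rough illustration of the verification and speed categorization steps, the sketch below opens a TCP connection to a proxy, times it, and maps the result to a coarse label. The helper names and the threshold values are assumptions chosen for the example. A successful TCP connection only shows that the port is open; a real checker would also send a test request through the proxy to confirm that it routes traffic.

```python
import socket
import time


def check_proxy(ip, port, timeout=3.0):
    """Try a TCP connection; return the latency in seconds, or None on failure."""
    start = time.monotonic()
    try:
        with socket.create_connection((ip, port), timeout=timeout):
            return time.monotonic() - start
    except OSError:  # refused, unreachable, or timed out
        return None


def speed_bucket(latency, fast=0.5, slow=2.0):
    """Map a latency to a coarse label; the thresholds are illustrative only."""
    if latency is None:
        return "dead"
    if latency < fast:
        return "fast"
    if latency < slow:
        return "medium"
    return "slow"


# Example usage (performs a real network connection, so it is left commented out):
# latency = check_proxy("203.0.113.7", 8080)
# print(speed_bucket(latency))
```

Running a check like this on a schedule, and dropping entries that come back as `dead`, is one simple way to keep a list current.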

Benefits of Using Proxy Scrapers

Proxy scrapers offer several advantages for individuals and businesses that rely on proxy servers:

a) Time-saving: Manually finding and testing proxies is extremely time-consuming. Proxy scrapers automate this process, allowing users to access large lists of working proxies quickly.

b) Increased Efficiency: By providing pre-verified proxies, these tools help users avoid the frustration of trying non-functional servers.

c) Cost-effective: Many proxy scrapers are free or inexpensive compared to paid proxy services, making them an attractive option for budget-conscious users.

d) Access to a Diverse Proxy Pool: Scrapers can discover proxies from many locations and with different characteristics, giving users more options to suit their specific needs.

e) Real-time Updates: Some proxy scrapers continuously update their lists, ensuring users can access the most current and functional proxies.

f) Customization: Advanced proxy scrapers allow users to filter and sort proxies based on specific criteria like speed, location, or anonymity level.

g) Integration Capabilities: Many scraping tools offer APIs or export options, making it easy to integrate proxy lists into other applications or workflows.
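To make the customization point concrete, here is a small sketch of filtering and sorting a proxy list by country, anonymity level, and latency. The `Proxy` record and the `select` function are hypothetical names invented for this example, and the sample data is made up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Proxy:
    ip: str
    port: int
    protocol: str   # e.g. "http" or "socks5"
    country: str    # two-letter country code
    anonymity: str  # "transparent", "anonymous", or "elite"
    latency: float  # measured latency in seconds


def select(proxies, *, country=None, anonymity=None, max_latency=None):
    """Return the proxies matching every given criterion, fastest first."""
    matches = [
        p for p in proxies
        if (country is None or p.country == country)
        and (anonymity is None or p.anonymity == anonymity)
        and (max_latency is None or p.latency <= max_latency)
    ]
    return sorted(matches, key=lambda p: p.latency)


pool = [
    Proxy("203.0.113.7", 8080, "http", "DE", "elite", 0.42),
    Proxy("198.51.100.23", 3128, "http", "US", "transparent", 0.18),
    Proxy("192.0.2.5", 1080, "socks5", "DE", "elite", 0.27),
    Proxy("192.0.2.9", 1080, "socks5", "DE", "anonymous", 0.10),
]
best = select(pool, country="DE", anonymity="elite", max_latency=1.0)
print([f"{p.ip}:{p.port}" for p in best])  # ['192.0.2.5:1080', '203.0.113.7:8080']
```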

Limitations and Challenges of Proxy Scraping

While proxy scrapers can be incredibly useful, they also come with certain limitations and challenges:

a) Reliability Issues: Free public proxies found by scrapers are often unreliable, with frequent downtime or slow speeds.

b) Short Lifespan: Public proxies tend to have a short lifespan as they quickly become overused or blocked by websites.

c) Security Risks: Public proxies can pose security risks, as some may be operated by malicious actors looking to intercept user data.

d) Limited Anonymity: Many free proxies offer limited anonymity and may not adequately protect user privacy.

e) Blocking and Detection: Websites are increasingly implementing measures to detect and block traffic from known proxy IPs, making scraped proxies less effective for some use cases.

f) Legal and Ethical Concerns: The legality and ethics of scraping proxy information from various sources can be questionable in some jurisdictions.

g) Maintenance: Proxy lists require constant updating and verification to remain useful, which can be resource-intensive.

h) Quality Variation: The quality and performance of scraped proxies can vary greatly, requiring additional filtering and testing by the end user.
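Because anonymity and quality vary so much, end users often re-test scraped proxies themselves. One common approach, sketched below under stated assumptions, is to send a request through the proxy to a header-echo endpoint you control and inspect what the server received. The function name, the header set, and the classification rules are illustrative assumptions rather than a standard, and the plain substring match on the IP is deliberately naive.

```python
# Headers that commonly reveal that a request passed through a proxy.
PROXY_HEADERS = {"via", "x-forwarded-for", "forwarded", "x-real-ip", "proxy-connection"}


def classify_anonymity(received_headers, real_ip):
    """Classify a proxy from the headers the target server received.

    received_headers: mapping of header name to value, as seen by the server.
    real_ip: the client's own public IP address, as a string.
    """
    headers = {name.lower(): value for name, value in received_headers.items()}
    if any(real_ip in value for value in headers.values()):
        return "transparent"  # the client's own IP leaked through
    if PROXY_HEADERS.intersection(headers):
        return "anonymous"    # proxy use is visible, but the client IP is not
    return "elite"            # nothing in the headers reveals a proxy


print(classify_anonymity({"Via": "1.1 cache"}, "198.51.100.9"))  # anonymous
```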

Legal and Ethical Considerations

When using proxy scrapers, it’s important to be aware of potential legal and ethical issues:

a) Terms of Service: Scraping proxy information from websites may violate their terms of service or acceptable use policies.

b) Copyright Concerns: In some cases, lists of proxy servers might be considered copyrighted information, making scraping and redistribution problematic.

c) Server Load: Aggressive scraping can place unnecessary load on source websites, potentially disrupting their services.

d) Privacy Issues: Some proxy lists may include servers not intended for public use, raising privacy concerns for the proxy owners.

e) Jurisdictional Differences: The legality of web scraping and proxy usage can vary between countries and regions.

f) Intended Use: While proxy scrapers are generally legal, the intended use of the proxies may fall into legal gray areas or be outright illegal in some cases.

Users should always research the legal implications in their jurisdiction and consider the ethical aspects of using scraped proxy lists.
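On the practical side, two habits help with the terms-of-service and server-load points above: checking a site's robots.txt before fetching, and spacing out requests. The sketch below uses Python's standard `urllib.robotparser` module. The user agent string, the `Throttle` class, and the sample rules are assumptions made up for this example, and honoring robots.txt does not by itself settle any legal question.

```python
import time
from urllib.robotparser import RobotFileParser


def build_rules(robots_txt):
    """Parse the text of a robots.txt file that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def allowed(parser, url, user_agent="example-proxy-scraper"):
    """Return True if the rules permit this (hypothetical) user agent to fetch url."""
    return parser.can_fetch(user_agent, url)


class Throttle:
    """Enforce a minimum delay between consecutive requests."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self._min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last = None

    def wait(self):
        """Block until at least min_interval has passed since the last call."""
        if self._last is not None:
            remaining = self._min_interval - (self._clock() - self._last)
            if remaining > 0:
                self._sleep(remaining)
        self._last = self._clock()


rules = build_rules("User-agent: *\nDisallow: /private/\n")
print(allowed(rules, "https://example.com/proxies.txt"))       # True
print(allowed(rules, "https://example.com/private/list.txt"))  # False
```

Calling `Throttle(2.0).wait()` before each request would keep a scraper to at most one request every two seconds per source.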

Best Proxy Scraping Tools

Now that we understand what proxy scrapers are and how they work, let’s review some of the best tools available for proxy scraping:

1. Geonode

Geonode is a comprehensive proxy solution that includes a powerful proxy scraper. It offers both residential and datacenter proxies with advanced filtering options.

Key Features:

- Real-time proxy scraping and verification

- Extensive geographical coverage

- Advanced filtering (country, city, ASN, provider)

- API access for developers

- Proxy rotation and load balancing

Pros:

- High-quality proxies with good reliability

- Excellent documentation and support

- Flexible pricing plans

Cons:

- More expensive than some alternatives

- Advanced features have a learning curve

2. Hidemy.name Proxy Scraper

Hidemy.name offers a free proxy scraper tool as part of its broader VPN and proxy services. It provides a substantial list of free proxies with various filtering options.

Key Features:

- Supports HTTP, HTTPS, and SOCKS proxies

- Filtering by country, port, protocol, and anonymity level

- Displays proxy speed and uptime

- Regular updates

Pros:

- User-friendly interface

- Good variety of proxy types and locations

- Free to use

Cons:

- No API for automated scraping

- Limited to web interface only

- Proxy quality can vary

3. Live Proxies

Live Proxies provides high-performance rotating and static proxies tailored for seamless web scraping. With a vast pool of residential and mobile IPs, Live Proxies ensures low detection rates, high-speed connections, and global coverage, making it an excellent choice for businesses and individuals conducting large-scale data extraction.

Key Features:

- Rotating Residential & Mobile Proxies: Ensures frequent IP changes to avoid bans and blocks.

- Sticky Sessions: Allows users to maintain the same IP for up to 60 minutes, ideal for session-based scraping.

- Global IP Coverage: Provides geolocation targeting with IPs from multiple countries.

- High-Speed & Low Latency: Optimized for fast data retrieval and large-scale scraping operations.

- User-Friendly Dashboard: Simple proxy management, usage tracking, and easy integration with automation tools.

Pros:

- Wide variety of proxy types to suit different needs.

- Customizable plans for flexibility.

- High anonymity and reliable performance.

- Responsive and helpful customer support.

Cons:

- Limited location options compared to some competitors.

- Some advanced features may cater more to enterprise needs.

Live Proxies is an excellent web scraping solution for professionals looking for stable, fast, and undetectable proxies. Their secure infrastructure and flexible IP rotation make them a top-tier choice for ad verification, price monitoring, SEO research, and market intelligence.

4. ProxyScrape

ProxyScrape is a popular and user-friendly proxy scraping tool offering free and premium services. It provides HTTP, HTTPS, and SOCKS proxies with various filtering options.

Key Features:

- Regular updates (every 5 minutes for premium users)

- API access for easy integration

- Proxy checking and verification

- Country and anonymity filtering

- Support for multiple proxy protocols

Pros:

- Large proxy pool with frequent updates

- Easy-to-use interface

- Reliable proxy verification

Cons:

- The free version has limitations on proxy numbers and update frequency

- Some users report inconsistent speeds with free proxies

5. ProxyNova

ProxyNova is a free proxy scraper and checker that provides a regularly updated list of proxy servers worldwide.

Key Features:

- Daily updates of proxy lists

- Country-based filtering

- Proxy speed and uptime information

- Simple, no-frills interface

Pros:

- Completely free to use

- Easy to navigate and understand

- Provides additional proxy server information

Cons:

- Limited features compared to paid options

- No API access

- Proxy quality can be inconsistent

6. Proxy-List.download

Proxy-List.download is a simple yet effective proxy scraper that offers free proxy lists in various formats.

Key Features:

- Multiple proxy protocols (HTTP, HTTPS, SOCKS4, SOCKS5)

- Country and anonymity filtering

- Various download formats (TXT, JSON, CSV)

- Regular updates

Pros:

- Easy to use with no registration required

- Supports multiple export formats

- Allows direct download of proxy lists

Cons:

- Basic interface with limited features

- No API access

- Proxy reliability can be inconsistent

7. Spys.one

Spys.one is a comprehensive proxy scraper and checker that provides detailed information about each proxy server.

Key Features:

- Extensive proxy details (anonymity, country, uptime, speed)

- Support for multiple proxy types

- Advanced filtering options

- Real-time proxy checking

Pros:

- Provides in-depth information about each proxy

- Regular updates

- Free to use

Cons:

- The interface can be overwhelming for beginners

- No direct API access

- Ads can be intrusive

8. Free Proxy List

Free Proxy List is a straightforward proxy scraper with a clean, easy-to-use interface for finding free proxies.

Key Features:

- Hourly updates

- Filtering by anonymity, country, and port

- HTTPS and Google-passed proxies

- Simple export functionality

Pros:

- Clean, user-friendly interface

- Frequent updates

- Easy export to CSV

Cons:

- Limited to HTTP/HTTPS proxies

- No API access

- Basic feature set

9. SSL Proxies

SSL Proxies specializes in providing a list of HTTPS (SSL) proxies, which are particularly useful for secure connections.

Key Features:

- Focus on HTTPS proxies

- Country and anonymity filtering

- Uptime and response time information

- Regular updates

Pros:

- Specialized in secure HTTPS proxies

- Simple, easy-to-use interface

- Free to use

Cons:

- Limited to HTTPS proxies only

- No advanced features or API

- Proxy quality can be variable

10. Proxy Scrape API

Proxy Scrape API is a developer-focused tool that allows programmatic access to scraped proxy lists.

Key Features:

- RESTful API for easy integration

- Support for multiple proxy protocols

- Customizable proxy attributes (anonymity, country, timeout)

- Regular updates and proxy verification

Pros:

- Ideal for developers and automated systems

- Flexible API with good documentation

- Offers both free and paid plans

Cons:

- Requires programming knowledge to use effectively

- The free plan has usage limitations

- No web interface for manual browsing

11. ProxyDB

ProxyDB is a comprehensive proxy database with a scraper to keep its lists up-to-date.

Key Features:

- Large database of proxies

- Multiple filtering options (protocol, country, port)

- Proxy testing and verification

- API access available

Pros:

- Extensive proxy database

- Regular updates and verifications

- Offers both web interface and API access

Cons:

- Some features require a paid subscription

- Interface can be complex for beginners

- Proxy quality varies

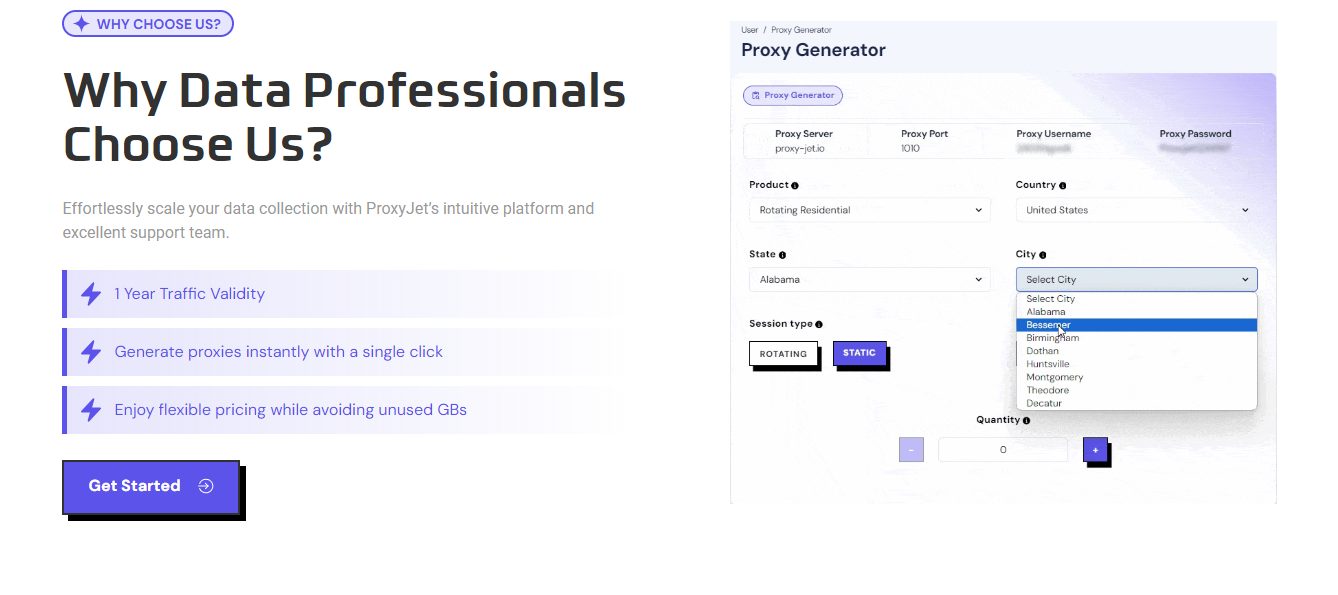

Why ProxyJet is the Go-To Choice for Scraping Proxies

When it comes to scraping proxies, reliability, speed, and consistent performance are key. This is where ProxyJet truly excels.

Designed with advanced scraping capabilities, ProxyJet ensures you always have access to a vast pool of high-quality proxies, significantly reducing the time and effort involved in manually sourcing them.

Its automated scraping feature allows users to gather fresh and reliable proxies from multiple sources with minimal intervention, making it the ideal solution for beginners and seasoned developers alike.

What sets ProxyJet apart is its commitment to maintaining a balance between speed and security. The platform not only scrapes proxies at lightning speed but also filters out low-quality or compromised proxies, ensuring that you always have access to the most secure and functional options.

With ProxyJet, you don’t just get quantity—you get quality, making it a standout choice for anyone serious about web scraping or proxy management.

How to Choose the Right Proxy Scraper

Selecting the most suitable proxy scraper depends on your specific needs and use case. Consider the following factors:

a) Proxy Quality: Look for scrapers that provide reliable, fast proxies with good uptime.

b) Update Frequency: Choose a tool that updates its proxy list regularly to ensure you always have access to working proxies.

c) Proxy Types: Ensure the scraper supports the proxy protocols you need (HTTP, HTTPS, SOCKS4, SOCKS5).

d) Geographical Diversity: If you need proxies from specific locations, check that the scraper offers adequate geographical coverage.

e) Filtering Options: Advanced filtering capabilities can help you find proxies matching your requirements.

f) Ease of Use: Consider the user interface and learning curve, especially if you’re new to proxy scraping.

g) Integration Capabilities: If you need to integrate proxy lists into other tools or workflows, look for scrapers with API access or export options.

h) Price: Evaluate the cost-effectiveness of paid options against your budget and needs.

i) Support and Documentation: Good customer support and comprehensive documentation can be crucial, especially for more complex tools.

j) Legal Compliance: Ensure the scraper operates within legal boundaries and respects website terms of service.

Best Practices for Using Proxy Scrapers

To get the most out of proxy scrapers while minimizing risks, follow these best practices:

a) Verify Proxies: Always test scraped proxies before using them in critical applications.

b) Rotate Proxies: Use proxy rotation to distribute requests and avoid overusing individual proxies.

c) Respect Rate Limits: Be mindful of the scraper’s rate limits and those of the websites you’re accessing through proxies.

d) Use Ethically: Avoid using scraped proxies for illegal or unethical activities.

e) Combine with Other Tools: Use proxy scrapers together with proxy checkers and proxy managers for better results.

f) Keep Lists Updated: Regularly refresh your proxy lists to maintain a pool of working proxies.

g) Implement Error Handling: When using proxies programmatically, build in robust error handling so that proxy failures are managed gracefully.

h) Monitor Performance: Keep track of proxy performance and remove underperforming or blocked proxies from your list.

i) Diversify Sources: Use multiple proxy scrapers to build a more diverse and reliable proxy pool.

j) Understand Limitations: Be aware of the limitations of free public proxies and adjust your expectations accordingly.
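Several of these practices (rotation, error handling, and removing underperforming proxies) can be combined in one small component. The sketch below is one possible design, not a reference implementation: `ProxyPool`, `fetch_with_rotation`, and the failure threshold are hypothetical, and `fetch` stands for whatever caller-supplied function performs a request through a given proxy.

```python
from collections import deque


class ProxyPool:
    """Round-robin rotation that drops a proxy after repeated failures."""

    def __init__(self, proxies, max_failures=3):
        self._queue = deque(dict.fromkeys(proxies))  # dedupe, keep order
        self._failures = {proxy: 0 for proxy in self._queue}
        self._max_failures = max_failures

    def __len__(self):
        return len(self._queue)

    def next(self):
        """Return the next proxy and move it to the back of the queue."""
        if not self._queue:
            raise LookupError("no working proxies left")
        proxy = self._queue[0]
        self._queue.rotate(-1)
        return proxy

    def report(self, proxy, ok):
        """Record a success or failure; evict after too many failures in a row."""
        if proxy not in self._failures:
            return
        self._failures[proxy] = 0 if ok else self._failures[proxy] + 1
        if self._failures[proxy] >= self._max_failures:
            self._queue.remove(proxy)
            del self._failures[proxy]


def fetch_with_rotation(pool, fetch, attempts=5):
    """Call fetch(proxy) with successive proxies until one succeeds."""
    last_error = None
    for _ in range(attempts):
        proxy = pool.next()
        try:
            result = fetch(proxy)
        except OSError as exc:  # network-level failure: try the next proxy
            pool.report(proxy, ok=False)
            last_error = exc
            continue
        pool.report(proxy, ok=True)
        return result
    raise RuntimeError("all attempts failed") from last_error
```

Only `OSError` is caught here, on the assumption that `fetch` signals network problems that way (as the standard library's socket and urllib errors do). Other exceptions propagate to the caller so that programming errors are not silently retried.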

The Future of Proxy Scraping

The landscape of proxy scraping is continually evolving, driven by technological advancements and changing internet dynamics. Here are some trends and predictions for the future of proxy scraping:

a) AI and Machine Learning Integration: Expect more sophisticated proxy scrapers leveraging AI and machine learning for better proxy discovery, verification, and categorization.

b) Increased Focus on Privacy: As privacy concerns grow, proxy scrapers may emphasize finding and verifying truly anonymous proxies.

c) Blockchain and Decentralized Proxies: The emergence of blockchain-based and decentralized proxy networks could provide new sources for proxy scrapers.

d) IoT Device Proxies: With the proliferation of Internet of Things (IoT) devices, we may see proxy scrapers tapping into this vast network of potential proxy sources.

e) Stricter Regulations: Increased scrutiny of web scraping practices could lead to more regulations affecting proxy scraping activities.

f) Advanced Geolocation Features: Proxy scrapers may offer more precise geolocation options, allowing users to find proxies from specific cities or regions.

g) Integration with VPN Services: We might see closer integration between proxy scrapers and VPN services, offering users more comprehensive privacy solutions.

h) Improved Real-time Verification: Advancements in verification technologies could lead to more accurate and up-to-date proxy lists.

i) Specialization: Some proxy scrapers may specialize in finding proxies for specific use cases, such as social media automation or e-commerce.

j) Enhanced Mobile Support: As mobile internet usage grows, proxy scrapers may focus more on finding and verifying mobile-friendly proxies.

Conclusion

Proxy scrapers play a vital role in the proxy ecosystem, giving users access to a wide range of proxy servers for many different applications.

While they save significant time and money, users should be aware of the limitations and potential risks associated with scraped proxies.

By choosing the right proxy scraping tool and following best practices, users can effectively leverage these tools to enhance their online privacy, bypass geo-restrictions, or manage complex web scraping tasks.

As the internet landscape evolves, proxy scrapers will likely adapt and improve, offering even more sophisticated features and better-quality proxy lists.

Always remember to use proxy scrapers and the resulting proxy lists responsibly and ethically. Stay informed about the legal implications in your jurisdiction, and respect the terms of service of websites you access through proxies.

About the Author:

Meet Angela Daniel, an esteemed cybersecurity expert and the Associate Editor at SecureBlitz. With a profound understanding of the digital security landscape, Angela is dedicated to sharing her wealth of knowledge with readers. Her insightful articles delve into the intricacies of cybersecurity, offering a beacon of understanding in the ever-evolving realm of online safety.

Angela's expertise is grounded in a passion for staying at the forefront of emerging threats and protective measures. Her commitment to empowering individuals and organizations with the tools and insights to safeguard their digital presence is unwavering.