Learn how to scrape any website into Markdown in 2025 using Python, Playwright, BeautifulSoup, and proxies.

Scraping a website and converting it into Markdown (.md) has become a powerful workflow for developers, writers, researchers, archivists, and AI engineers.

Why Markdown?

- It’s portable

- It’s lightweight

- It’s readable by humans and machines

- It’s perfect for blogs, GitHub wikis, documentation, AI training datasets, and static site generators

Today, you’ll learn a step-by-step process for scraping websites to Markdown in 2025: clean, structured, automated, and scalable.

You’ll also get a complete Python script that extracts:

- Titles

- Subheadings

- Paragraphs

- Images

- Links

- Code blocks

- Lists

- Tables

…and converts all of it into clean Markdown automatically.

Let’s begin.


Why Scrape Websites to Markdown? (2025 Use Cases)

Markdown extraction is now used across:

1️⃣ Technical Documentation

Developers export website docs into Markdown to host them locally or on GitHub.

2️⃣ Personal Knowledge Bases

Obsidian, Notion, and Logseq users import web content to build knowledge graphs.

3️⃣ AI Knowledge Training

Markdown is a popular input format for vector embedding pipelines because it keeps document structure in plain text.

4️⃣ SEO & Content Research

Scraping competitor articles into Markdown for side-by-side analysis.

5️⃣ Static Site Generators

Jekyll, Hugo, and Astro build pages from .md content, and Next.js can do the same through MDX.

6️⃣ Web Archival & Backup

Store entire websites offline, version-controlled, machine-readable.

You’re not just “scraping” — you’re building portable, structured, future-proof knowledge.

Is It Legal to Scrape Websites? (Important)

Website scraping is generally legal when you follow these rules:

- Scrape only publicly accessible content

- Respect robots.txt where required

- Never bypass logins or paywalls

- Do not scrape personal/private user data

- Use proxies to avoid accidental blocks

- Respect rate limits

- Attribute and comply with content licenses

This guide teaches legitimate, ethical scraping only.
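You can automate the robots.txt check with Python's standard-library `urllib.robotparser`. The sketch below assumes a few things: `USER_AGENT` is a hypothetical name for your scraper, and the 2-second fallback delay is an arbitrary default. The helpers parse robots.txt text that you pass in, which makes them easy to test. The comment at the end shows the live-fetch variant.

```python
from urllib.parse import urlsplit, urlunsplit
from urllib.robotparser import RobotFileParser

USER_AGENT = "MarkdownScraper"  # hypothetical; use the UA your scraper actually sends


def robots_url_for(page_url: str) -> str:
    """robots.txt always lives at the root of the scheme + host."""
    parts = urlsplit(page_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


def load_rules(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt content that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def is_allowed(rules: RobotFileParser, page_url: str, user_agent: str = USER_AGENT) -> bool:
    """True if the rules let `user_agent` fetch `page_url`."""
    return rules.can_fetch(user_agent, page_url)


def polite_delay(rules: RobotFileParser, user_agent: str = USER_AGENT, default: float = 2.0) -> float:
    """Use the site's Crawl-delay if it declares one, else a default pause (seconds)."""
    delay = rules.crawl_delay(user_agent)
    return float(delay) if delay is not None else default


# Live usage (downloads robots.txt over the network):
#   rules = RobotFileParser(robots_url_for(url))
#   rules.read()
#   if is_allowed(rules, url):
#       time.sleep(polite_delay(rules))
#       ...scrape url...
```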

Why Are Proxies Necessary for Safe Website Scraping?

Websites have become much stricter, and bot-protection services such as these block automated traffic aggressively:

- Cloudflare

- Akamai

- PerimeterX

- DataDome

- FingerprintJS

You need rotating IPs to avoid:

- 429 Too Many Requests

- 403 Forbidden

- CAPTCHA challenges

- IP blacklisting
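Here is one way to rotate IPs and back off after 429 or 403 responses. This sketch uses the standard library's `urllib.request` instead of `requests`, so it needs no extra dependencies. The proxy URLs are placeholders in the same `http://user:pass@host:port` form as the script's `PROXY_URL`. Treating 403 and 429 as retryable is an assumption, so adjust it per site. If your provider already rotates IPs behind a single gateway address, a one-item list still gives you the backoff behavior.

```python
import itertools
import time
import urllib.error
import urllib.request

# Statuses that usually mean "this IP is throttled or flagged", so retrying
# through a different proxy after a pause has a chance of succeeding.
RETRY_STATUSES = {403, 429}


def backoff_delay(attempt: int, base: float = 1.0, cap: float = 30.0) -> float:
    """Exponential backoff: base, 2*base, 4*base, ... capped at `cap` seconds."""
    return min(cap, base * 2 ** attempt)


def fetch_via_rotating_proxies(url: str, proxy_urls: list[str], max_attempts: int = 4) -> str:
    """Fetch `url`, moving to the next proxy in the pool after each 403/429."""
    if not proxy_urls:
        raise ValueError("proxy_urls must not be empty")
    pool = itertools.cycle(proxy_urls)
    last_error = None
    for attempt in range(max_attempts):
        proxy = next(pool)
        opener = urllib.request.build_opener(
            urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        )
        try:
            with opener.open(url, timeout=30) as resp:
                return resp.read().decode("utf-8", errors="replace")
        except urllib.error.HTTPError as err:
            if err.code not in RETRY_STATUSES:
                raise  # a 404 or 500 won't be fixed by switching IPs
            last_error = err
            if attempt < max_attempts - 1:
                time.sleep(backoff_delay(attempt))
    raise RuntimeError(f"gave up on {url} after {max_attempts} attempts") from last_error
```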

Recommended Proxy Choices for Markdown Scraping

1️⃣ Decodo – Best balance of price + success rate

2️⃣ Oxylabs – Enterprise-level pools

3️⃣ Webshare – Cheapest for small jobs

4️⃣ IPRoyal – Stable residential & mobile proxies

5️⃣ Mars Proxies – Niche eCommerce and social automation

For production workloads, Decodo residential proxies consistently perform well with JavaScript-heavy sites and allow for unlimited scraping volume.

How to Scrape Any Website to Markdown: Complete Process Overview

Here’s the high-level pipeline:

1. Fetch the webpage HTML

Using Playwright for JS-rendered sites or requests for simple HTML pages.

2. Parse the content

With BeautifulSoup or the Playwright DOM.

3. Extract text and structure

Headings, paragraphs, lists, images, etc.

4. Convert to Markdown

Using a Markdown converter or your own mapper.

5. Save to .md file

Organized by slug or title.

6. (Optional) Bulk scrape + bulk export

Now let’s dive into the real implementation.

Tools You Need (2025 Stack)

- Python 3.10+

- Playwright (for dynamic websites)

- BeautifulSoup4

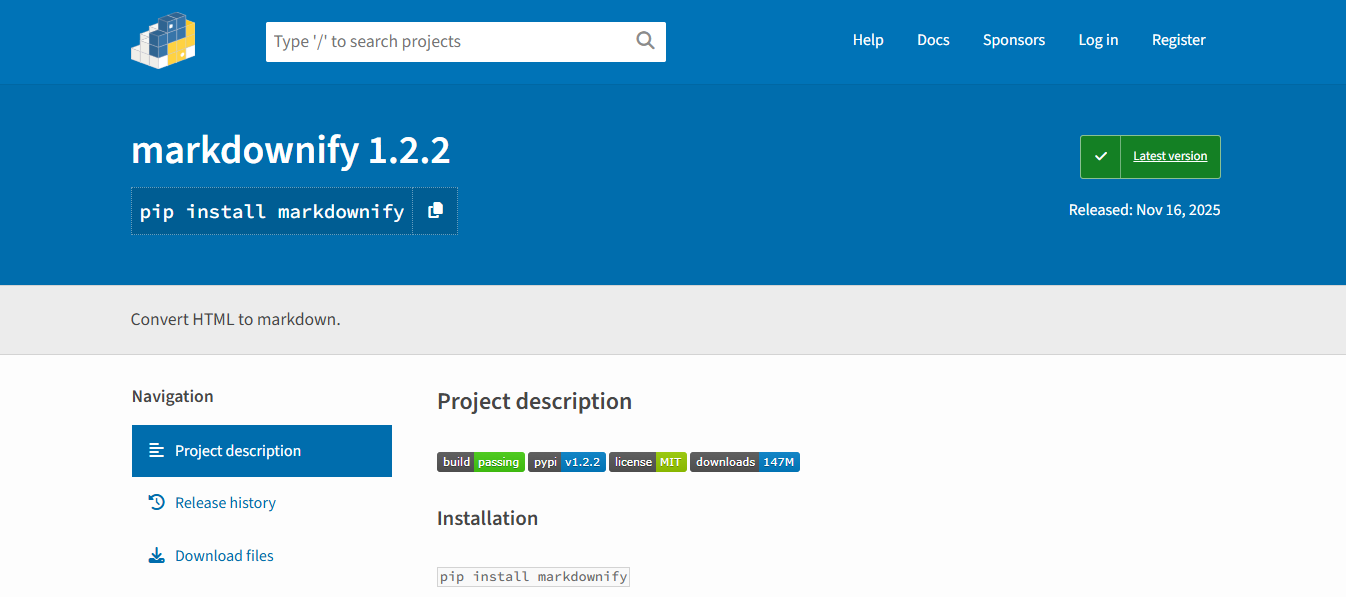

- markdownify (HTML → Markdown converter)

- Proxies (Decodo or others)

Install packages:

pip install playwright beautifulsoup4 markdownify requests

playwright install

Full Python Script to Scrape a Website to Markdown

(JS-rendered websites supported)

This script handles:

- Headless rendering

- Proxies

- Image downloading

- Markdown conversion

- Automatic file naming

- Cleaning unwanted boilerplate

📌 Python Code

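import os
import re
from urllib.parse import urljoin, urlsplit

import requests
from bs4 import BeautifulSoup
from markdownify import markdownify as md
from playwright.sync_api import sync_playwright

# -------------------------------------------------------
# 1. CONFIGURATION
# -------------------------------------------------------
PROXY_URL = "http://user:pass@gw.decodo.io:12345"  # Replace with your proxy (or None for no proxy)
SAVE_IMAGES = True
OUTPUT_FOLDER = "markdown_export"

os.makedirs(OUTPUT_FOLDER, exist_ok=True)


def playwright_proxy(proxy_url):
    """Turn 'http://user:pass@host:port' into Playwright's proxy dict,
    which takes the username and password as separate fields."""
    parts = urlsplit(proxy_url)
    server = f"{parts.scheme}://{parts.hostname}"
    if parts.port:
        server += f":{parts.port}"
    proxy = {"server": server}
    if parts.username:
        proxy["username"] = parts.username
        proxy["password"] = parts.password or ""
    return proxy


# -------------------------------------------------------
# 2. DOWNLOAD IMAGE
# -------------------------------------------------------
def download_image(img_url, folder):
    """Save one image into `folder`; return its local path, or None on failure."""
    if not img_url.startswith(("http://", "https://")):
        return None  # skip data: URIs and other non-HTTP sources
    filename = os.path.basename(urlsplit(img_url).path) or "image"
    path = os.path.join(folder, filename)
    proxies = {"http": PROXY_URL, "https": PROXY_URL} if PROXY_URL else None
    try:
        resp = requests.get(img_url, proxies=proxies, timeout=10)
        resp.raise_for_status()
    except requests.RequestException:
        return None
    with open(path, "wb") as f:
        f.write(resp.content)
    return path


# -------------------------------------------------------
# 3. SCRAPE WEBSITE USING PLAYWRIGHT
# -------------------------------------------------------
def fetch_html(url):
    """Render `url` in headless Firefox (through the proxy, if set) and return its HTML."""
    with sync_playwright() as p:
        browser = p.firefox.launch(headless=True)
        context = browser.new_context(
            proxy=playwright_proxy(PROXY_URL) if PROXY_URL else None
        )
        page = context.new_page()
        page.goto(url, timeout=60000)
        page.wait_for_timeout(5000)  # give client-side JavaScript time to render
        html = page.content()
        browser.close()
    return html


# -------------------------------------------------------
# 4. CONVERT WEBSITE TO MARKDOWN
# -------------------------------------------------------
def scrape_to_markdown(url):
    html = fetch_html(url)
    soup = BeautifulSoup(html, "html.parser")

    # Remove scripts, styles, navbars, and footers
    for tag in soup(["script", "style", "noscript", "nav", "footer"]):
        tag.decompose()

    # Extract the title (.string is None for an empty <title>, so use get_text())
    title = (soup.title.get_text(strip=True) if soup.title else "") or "untitled"
    # Collapse anything that isn't a letter, digit, or underscore into "-" for a safe file name
    slug = re.sub(r"\W+", "-", title.lower()).strip("-") or "untitled"

    # Convert the <body> (or the whole document if there is none) to Markdown
    body = soup.body or soup
    markdown_text = md(str(body), heading_style="ATX")

    # Download images and point the Markdown at the local copies
    if SAVE_IMAGES:
        img_folder = os.path.join(OUTPUT_FOLDER, f"{slug}_images")
        os.makedirs(img_folder, exist_ok=True)
        done = set()
        for img in body.find_all("img"):
            src = img.get("src")
            if not src or src in done:
                continue  # skip missing src and images already relinked
            done.add(src)
            img_path = download_image(urljoin(url, src), img_folder)  # resolves relative src
            if img_path:
                # Link relative to the .md file, which is saved in OUTPUT_FOLDER
                rel_path = os.path.relpath(img_path, OUTPUT_FOLDER).replace(os.sep, "/")
                markdown_text = markdown_text.replace(src, rel_path)

    # Save the Markdown file
    md_path = os.path.join(OUTPUT_FOLDER, f"{slug}.md")
    with open(md_path, "w", encoding="utf-8") as f:
        f.write(f"# {title}\n\n")
        f.write(markdown_text)
    return md_path


# -------------------------------------------------------
# USAGE
# -------------------------------------------------------
if __name__ == "__main__":
    url = "https://example.com"
    file_path = scrape_to_markdown(url)
    print("Markdown saved to:", file_path)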

How This Script Works (Explained Simply)

1. Playwright loads the page

Pages that build their content with JavaScript are fully rendered before the HTML is captured.

2. HTML is passed to BeautifulSoup

Which strips out unwanted boilerplate (scripts, styles, navigation bars, footers).

3. markdownify converts HTML to Markdown

Keeping structure like:

# H1
## H2
* bullet lists
1. ordered lists

4. Images are downloaded and relinked

Your Markdown becomes fully offline-ready.

5. A clean .md file is saved

Handling Sites With Heavy Protection (Cloudflare, Akamai, etc.)

Many modern websites deploy strong bot protection.

To get through these checks reliably without breaking the rules above, you need:

- Human-like browser automation (Playwright)

- Strong residential proxies (Decodo, IPRoyal, Oxylabs)

- Randomized delays between actions (2–4 seconds)

- Random scroll simulation

- Realistic, varied request headers (such as the User-Agent)

You can add human scrolling:

page.mouse.wheel(0, 5000)
page.wait_for_timeout(1500)

And rotate user agents:

context = browser.new_context(
    user_agent="Mozilla/5.0 ..."
)
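You can wrap the scrolling and user-agent snippets into small reusable helpers. This sketch assumes a Playwright sync `Page` like the one created in `fetch_html()`. `pick_user_agent`, `scroll_plan`, and `human_scroll` are hypothetical helper names. The Firefox user-agent strings are examples you should keep current, and the 300-1200 px scroll range is an arbitrary choice. The 2–4 second pauses follow the delay range listed above.

```python
import random

# Example desktop Firefox user agents (assumptions; keep them current). They match
# the Firefox engine the full script launches: a Chrome UA on a Firefox engine is
# an easy mismatch for bot detectors to spot.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:128.0) Gecko/20100101 Firefox/128.0",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10.15; rv:128.0) Gecko/20100101 Firefox/128.0",
    "Mozilla/5.0 (X11; Linux x86_64; rv:128.0) Gecko/20100101 Firefox/128.0",
]


def pick_user_agent(rng=random) -> str:
    """Choose a user agent for a new browser context."""
    return rng.choice(USER_AGENTS)


def scroll_plan(steps: int = 5, rng=random) -> list[tuple[int, float]]:
    """(pixels, pause_ms) pairs: uneven downward scrolls with 2-4 s pauses."""
    return [(rng.randint(300, 1200), rng.uniform(2000, 4000)) for _ in range(steps)]


def human_scroll(page, steps: int = 5, rng=random) -> None:
    """Apply a scroll plan to a Playwright sync Page."""
    for pixels, pause_ms in scroll_plan(steps, rng):
        page.mouse.wheel(0, pixels)
        page.wait_for_timeout(pause_ms)


# Usage inside fetch_html() from the full script (sketch):
#   context = browser.new_context(user_agent=pick_user_agent())
#   page = context.new_page()
#   page.goto(url, timeout=60000)
#   human_scroll(page, steps=3)
```

Planning the scrolls separately from applying them lets you test the randomness without launching a browser.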

Bulk Scraping: Converting Multiple URLs Into Markdown

You can process entire lists:

urls = [
    "https://example.com/docs",
    "https://example.org/article",
    "https://example.net/page",
]

for u in urls:
    print(scrape_to_markdown(u))

This allows:

- Full website archiving

- One-click conversion of 100+ pages

- Competitive research automation

- SEO content analysis
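For larger batches, you can make the loop above more polite and more robust. In this sketch, `scrape_many` is a hypothetical helper that skips duplicate URLs, waits a random 2–4 seconds between pages, and records failures instead of crashing. The `sleep` parameter exists only so you can test the function without real delays.

```python
import random
import time


def scrape_many(urls, scrape, min_delay=2.0, max_delay=4.0, sleep=time.sleep):
    """Run `scrape` (e.g. scrape_to_markdown) over each unique URL, in order.

    Returns (saved, failed): saved maps URL -> scrape() result, and failed maps
    URL -> a short error description, so one broken page doesn't stop the batch.
    """
    saved, failed = {}, {}
    for i, url in enumerate(dict.fromkeys(urls)):  # drop duplicates, keep order
        if i:
            sleep(random.uniform(min_delay, max_delay))  # pause between pages, not before the first
        try:
            saved[url] = scrape(url)
        except Exception as exc:  # broad on purpose: record the failure and move on
            failed[url] = repr(exc)
    return saved, failed


# Usage with the script above:
#   saved, failed = scrape_many(urls, scrape_to_markdown)
#   print(f"{len(saved)} saved, {len(failed)} failed")
```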

AI + Markdown: The Future Workflow

Markdown works perfectly with:

- LLM fine-tuning datasets

- RAG pipelines

- Embedding databases

- Vector search

- Chatbot knowledge bases

Because Markdown is:

- Clean

- Structured

- Lightweight

- Hierarchical

- Easy to parse

Increasingly, tech companies are opting for Markdown for AI knowledge ingestion.
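Markdown's heading hierarchy is what makes it easy to split for RAG and embedding pipelines. This minimal sketch assumes ATX headings, which `heading_style="ATX"` in the script produces. `chunk_by_headings` is a hypothetical helper that cuts the text at each heading and stores the heading path as metadata for each chunk. Real pipelines usually also cap chunk length, which this sketch skips.

```python
import re

HEADING = re.compile(r"^(#{1,6})\s+(.*)$")


def chunk_by_headings(markdown: str) -> list[dict[str, str]]:
    """Split Markdown at ATX headings; each chunk records its heading path."""
    chunks: list[dict[str, str]] = []
    path: list[str] = []   # current heading trail, e.g. ["Guide", "Install"]
    lines: list[str] = []  # body lines of the chunk being built
    in_fence = False       # '#' lines inside ``` code fences are not headings

    def flush() -> None:
        text = "\n".join(lines).strip()
        if text:
            chunks.append({"headings": " > ".join(path), "text": text})
        lines.clear()

    for line in markdown.splitlines():
        if line.startswith("```"):
            in_fence = not in_fence
        match = None if in_fence else HEADING.match(line)
        if match:
            flush()
            level = len(match.group(1))
            del path[level - 1:]  # drop headings at this depth or deeper
            path.append(match.group(2).strip())
        else:
            lines.append(line)
    flush()
    return chunks
```

Each chunk's `headings` string (for example `Guide > Install`) can be stored alongside its embedding, so search results show where in the page the text came from.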

When to Use Proxies in Markdown Scraping

Use proxies when a site:

- Blocks your country

- Has strong rate limits

- Fingerprints visitors and blocks repeat IPs

- Uses anti-bot filtering

- Bans datacenter IPs

Best Proxy Providers (2025)

1. Decodo

Best for automated scraping + unlimited bandwidth

- Strong global residential pool

- API key authorization

- High success rate on JS websites

2. Oxylabs

Premium large-scale option

- Enterprise volume

- High performance

3. Webshare

Best for budget scraping

- Cheap rotating IPs

- Great for personal projects

4. Mars Proxies

Good for social media & ecommerce tasks

5. IPRoyal

Stable rotating residential & mobile proxies

Recommendation: For most users, Decodo residential proxies are the sweet spot between power, price, and anti-block success rate.

Best Practices for Clean Markdown Extraction

1. Remove scripts and styles

2. Keep Markdown minimalistic

3. Store images locally

4. Normalize headings (H1 → H6)

5. Avoid duplicate content

6. Keep URLs absolute
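You can automate two of these practices on the finished Markdown. This sketch uses hypothetical helper names. `normalize_headings` promotes headings so the shallowest one becomes H1 while keeping relative depth. `content_fingerprint` hashes whitespace- and case-normalized text so you can skip pages you have already saved. For brevity, `normalize_headings` also treats `#` lines inside code fences as headings.

```python
import hashlib
import re

HEADING = re.compile(r"^(#{1,6})([ \t]+.*)$", re.MULTILINE)


def normalize_headings(markdown: str) -> str:
    """Promote ATX headings so the shallowest one becomes H1, keeping relative depth."""
    levels = [len(m.group(1)) for m in HEADING.finditer(markdown)]
    if not levels:
        return markdown
    shift = min(levels) - 1
    return HEADING.sub(lambda m: "#" * (len(m.group(1)) - shift) + m.group(2), markdown)


def content_fingerprint(markdown: str) -> str:
    """Hash the text with whitespace collapsed and case folded, so trivially
    reformatted copies of the same page produce the same fingerprint."""
    canonical = " ".join(markdown.split()).casefold()
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


# Usage in a bulk run:
#   seen = set()
#   fp = content_fingerprint(markdown_text)
#   if fp not in seen:
#       seen.add(fp)
#       ...save the file...
```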

Real-World Examples of Markdown Scraping

📌 GitHub Wiki Migration

Convert old HTML docs into Markdown for GitHub wikis.

📌 Knowledge Base Creation

Turn 100+ blog posts into an Obsidian vault.

📌 SEO Competitor Research

Scrape top-ranking articles to analyze structure, keywords, and topical depth.

📌 AI Dataset Creation

Feed Markdown into embedding pipelines for semantic search.

📌 Offline Archival

Save entire websites into Markdown folders for reference.

Frequently Asked Questions About Scraping a Website to Markdown

What does it mean to scrape a website to Markdown?

Scraping a website to Markdown means extracting the content of a website—such as headings, paragraphs, lists, tables, and images—and converting it into Markdown (.md) format. Markdown is a lightweight, readable, and easily usable format for documentation, blogs, AI datasets, and knowledge bases.

What tools do I need to scrape a website and convert it to Markdown in 2025?

The most commonly used tools include Python, Playwright or Selenium for dynamic content, BeautifulSoup for parsing HTML, and markdownify to convert HTML to Markdown. Additionally, proxies like Decodo help you scrape at scale without getting blocked.

Can I scrape any website into Markdown?

Technically, most public websites can be scraped into Markdown; however, it is advisable to avoid scraping private content, login-protected pages, and sites with strict terms of service. Always check a website’s robots.txt and scraping policies before extraction.

How do I handle images when scraping to Markdown?

Images can be downloaded locally and referenced in your Markdown file. Using scripts, you can automatically fetch image URLs, save them to a folder, and update the Markdown links so your content is fully offline-ready.

Do I need proxies for scraping websites into Markdown?

Yes, proxies are highly recommended, especially for scraping large websites or sites protected by anti-bot systems. Residential proxies like Decodo or IPRoyal provide real IP addresses that reduce the chance of blocks and CAPTCHAs.

Is it legal to scrape a website to Markdown?

Scraping public content for personal, research, or internal use is generally legal. Avoid scraping private data, bypassing logins, or using the scraped content commercially in a manner that violates copyright. Always respect a site’s terms of service and applicable laws.

Can I automate scraping multiple pages into Markdown?

Absolutely. You can create a script that loops through multiple URLs, scrapes each page, and saves them as individual Markdown files. This workflow is ideal for knowledge base migrations, content analysis, or SEO research.

Conclusion

Scraping a website into Markdown unlocks powerful workflows across research, SEO, development, documentation, and AI data pipelines.

With Python, Playwright, BeautifulSoup, and markdownify, plus rotating residential proxies from providers like Decodo, you can convert most public websites into clean, portable .md files ready for automation or analysis.

Whether you want to archive pages, study competitors, migrate CMS content, or feed AI systems with structured datasets, scraping to Markdown is one of the most efficient and future-proof methods available today.


About the Author:

Meet Angela Daniel, an esteemed cybersecurity expert and the Associate Editor at SecureBlitz. With a profound understanding of the digital security landscape, Angela is dedicated to sharing her wealth of knowledge with readers. Her insightful articles delve into the intricacies of cybersecurity, offering a beacon of understanding in the ever-evolving realm of online safety.

Angela's expertise is grounded in a passion for staying at the forefront of emerging threats and protective measures. Her commitment to empowering individuals and organizations with the tools and insights to safeguard their digital presence is unwavering.